I attended the 2016 Social Media Conference yet again this year. I think it was the best one I have attended, with each session giving me little tidbits of information about how to better manage and use social media as a professional and in my classes. This is not to say that the conference can't be improved. I would like to see stronger sessions on leveraging the analytics available in some of the platforms, as well as a track dedicated to academic research revolving around social media. But, from top to bottom, this year's conference was the best I have attended.

For my first session, I attended "An Introduction to Social Network Analysis" by Vivian Ta (@VivianTa22). A doctoral student from UTA, she presented last year and was back again. She described social network analysis as mapping and measuring the flow of information. It enables you to identify connectors, influencers, bridges, and isolates. The methodology has been applied to the spread of disease, sexual relationships, collaborations, business, law enforcement, etc. The value of social network analysis is that it allows you to identify patterns of information flow in your data. There are two types of social network analysis: egocentric and socio-centric. Egocentric analysis revolves around a single person and can give insight into economic success, depression, etc. Socio-centric analysis focuses on large groups of people and can give insight into concentration of power, spread of disease, group dynamics, etc. There are three types of measures used to analyze social network relationships (referred to as centrality measures): degree, betweenness, and closeness. Degree refers to the number of connections each node has. Betweenness identifies nodes that link multiple groups together. These nodes represent single points of failure and can be important linkages that allow information to travel between groups. Lastly, closeness refers to how quickly information can travel from one node to the others. As for actually gathering the data, you can use direct measures such as surveys, but this can be time consuming and difficult. Indirect measures can come from organizations, citation analyses, co-authorships, memberships in organizations, etc. This is a cheaper approach but does not indicate how nodes are truly related to one another. Still another approach is to use data scraping, web harvesting, or data extraction techniques to pull data directly from users' social media accounts.
For example, you can harvest likes, follows, friends, replies, retweets, comments, tags, etc. See OutWit Hub for an example of a data scraper. As for analyzing the data retrieved, there are several FREE platforms: NodeXL, Pajek, UCINET, NetDraw, Mage, GUESS, R packages for SNA, and Gephi. You can examine the frequency of interactions, types of interactions or flows, as well as the similarity of characteristics (status, location, education, beliefs, etc.). This was a nice presentation that gave me a research idea so, in my mind, it was excellent.
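
To make the three centrality measures a little more concrete, here is a small Python sketch of my own (it is not from the presentation). It assumes a made-up network of six people, A through F, arranged as two tight groups joined by a single C-D link, and it uses only the standard library rather than any of the tools listed above. Closeness is computed here as (number of reachable nodes) divided by (sum of shortest-path distances), which is one common definition; betweenness uses Brandes' algorithm for unweighted, undirected graphs.

```python
from collections import deque

# Hypothetical example network: two triangles (A-B-C and D-E-F) joined by the C-D link.
graph = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B", "D"},
    "D": {"C", "E", "F"},
    "E": {"D", "F"},
    "F": {"D", "E"},
}


def degree_centrality(g):
    """Degree: the number of direct connections each node has."""
    return {node: len(neighbors) for node, neighbors in g.items()}


def closeness_centrality(g):
    """Closeness: reachable nodes divided by the sum of shortest-path distances."""
    result = {}
    for source in g:
        dist = {source: 0}
        queue = deque([source])
        while queue:  # breadth-first search gives shortest hop counts
            node = queue.popleft()
            for nb in g[node]:
                if nb not in dist:
                    dist[nb] = dist[node] + 1
                    queue.append(nb)
        total = sum(dist.values())
        result[source] = (len(dist) - 1) / total if total else 0.0
    return result


def betweenness_centrality(g):
    """Betweenness: how many shortest paths between other pairs pass through a node."""
    score = {n: 0.0 for n in g}
    for s in g:
        stack, preds = [], {n: [] for n in g}
        sigma = {n: 0 for n in g}  # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in g[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {n: 0.0 for n in g}
        while stack:  # accumulate dependencies from the farthest nodes back
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was counted from both ends in an undirected graph.
    return {n: value / 2 for n, value in score.items()}


degree = degree_centrality(graph)
closeness = closeness_centrality(graph)
betweenness = betweenness_centrality(graph)

for node in sorted(graph):
    print(node, degree[node], round(closeness[node], 3), betweenness[node])
```

In this toy network, C and D are the "bridges": every shortest path between the two groups runs through them, so they score highest on betweenness while the other four nodes score zero. For real data, a library or one of the platforms above would be the more sensible choice.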

The second session I attended was titled "How to Use Social Media in Higher Education to Tell Your Program's Story" by Dr. Becker and Dr. Putman. They started off by citing Hoover, 2015, who found that interacting with current students and staff through social media is more effective than interacting with them face to face. They stressed the need to align social media efforts with strategic goals and to have a dedicated social media person who handles these efforts 10-15 hours per week. They used a work-study position for this, which is funded mostly through the federal government, thus reducing the funding requirement on the part of the department. Start by developing strategies and goals, and develop a consistent message across platforms. It would not be wise to have a very conservative message shared through one outlet and then a very wild approach on another. Develop a hashtag for your department. They noted that it is challenging to post consistently, create messages with audience appeal, and dedicate an employee's time. You should post photos with your messages, as this leads to significantly more likes, comments, and click-throughs. Short posts and posts that pose a question also tend to be more effective. Finally, the best times of the day to post are around 9:00 AM and 4:45 PM, so post 1-2 times per day. This was a good presentation, which gave me some good ideas for managing our own department's use of social media.

The third session was titled "Twitter for Educators - Network, Learn, Grow" by Yvonne Mulhern. She started off with an interesting quote from someone whose name I did not catch: "Twitter makes me like people I've never met. Facebook makes me hate people I know." She talked about using Twitter for professional development, self-promotion, and the use of Twitter Lists. Follow people you admire, leaders in your field, etc., for professional development. Tweet publications, research ideas, etc. Finally, follow others' Twitter Lists, which enables you to follow leaders in your field more efficiently. Follow HigherEdJobs or Chronicles Jobs. The idea is to develop your own personal learning network (PLN). There are also several tools that enable you to streamline your use of Twitter, such as Twistori.com and TweetReports.com, and do not forget to look at Twitter analytics.

The fourth session was presented by Dr. Goen and Dr. Stafford and was titled "Remaining Legal and Combating Trolls on Periscope, Facebook Live, and Meerkat". They limited the discussion of Meerkat given its recent demise. They first defined a troll as an individual who visits a page to post insulting, off-topic comments to provoke some sort of emotional response. In general, they identified two different types of trolls: spammer trolls and disruptive trolls. Spammer trolls are all about themselves, selling their stuff, etc. Disruptive trolls spew insults, often sexual or violent posts, etc. Regardless of the type of troll, there are ways to combat them. On Periscope, you can set a broadcast to follow only, which means only those who follow you may post a comment. All others can only watch. You can also block users on a live broadcast. This does not remove a negative comment, but it does prevent them from making additional ones. Finally, you can set a broadcast to private, which only enables those following you to make comments. There are similar tools on Facebook (FB). On FB, you can report/block a person from your page. The report aspect provides FB with information about your objection and allows you to continue to view the other person's account. If you simply block a user, you do not have to report anything, but by doing so, you can no longer see the other person's account. You can also adjust your general privacy settings to limit what others can see. Alternatively, you can customize each post to limit its exposure. Finally, you should only accept friend requests from people you know.

The final session was over YouTube by Dr. Mitzi Lewis. Titled "How to Make the Most of Your YouTube Channel", Dr. Lewis boiled it down to three essential areas: Brand Your Channel, Be Found, and Keep 'em Watching. For Brand Your Channel, know your mission and post videos that are directly relevant to it. If you need to deviate, consider adding another channel. Make sure your banner, name, and icon are consistent in terms of wording, colors, style, etc. You are trying to give off a consistent image. Have a good, relevant channel trailer that is, ideally, 30-60 seconds. Also, include channel sections to group similar/related videos. For Be Found, make sure your thumbnails are images that are relevant to the video content. She gave the example of Jimmy Kimmel videos, in which the thumbnail is usually an image of the guest being interviewed. Make sure the titles use words that people will search for. Put the meat of your descriptions early in the narrative, so they can be seen without hitting "more." If you want to identify good keywords to include, google.com/trends/explore can be helpful. Also, keep tags short and meaningful. Finally, for the Keep 'em Watching group, Dr. Lewis suggested maximizing watch time by hooking viewers first and then letting them watch. So, give them a tease, then an intro, and then discuss your topic. It is extremely important to hook them early. If you use playlists to organize related videos, limit the number of videos in the playlist to the teens or so. Use a watermark, which will appear on all of your videos. Lastly, upload videos regularly so subscribers have something to visit. For other helpful tips and tricks, she recommended creatoracademy.youtube.com.

This year's conference was better than in years past. Again, I would like to see an academic research track included as well as some strong sessions on analytics. But, this is a nice little conference that is applicable to those in higher education, primary and secondary schools, as well as businesses and other organizations. So, plan on attending next year!

Welcome to my blog, where I share my opinion on various topics related to technology and education.

Sunday, November 6, 2016

Thursday, November 3, 2016

Review: ISSA 2016 International Conference

As a new ISSA member as of late last year, I attended my first ISSA conference, conveniently located (for me) in Dallas, Texas. Being used to academic conferences myself, I was not quite sure what to expect. I knew it was going to be more practitioner based and practitioner based it was...largely from a fairly managerial perspective. So, that was in my favor. So, here's a recap of the sessions I attended.

For me, the first day was the weaker of the two days but the first session of the day was the strongest. It was titled "Architecting Your Cyber-security Organization For Big Data, Mobile, Cloud, and Digital Innovation" by Mr. David Foote. He discussed the importance of aligning business and security objectives and argued that part of making sure that happens is having CISOs report to Boards of Directors rather than to CIOs. He argued that part of what makes managing so difficult is churn within the field. This is a result, he argued, of people being spread too thin and of burnout, as experienced cyber-security specialists are constantly having to re-tune because of disruptive technologies (Cloud, Big Data, IoT, etc.). According to his research, cyber-security jobs require more certifications than other IT jobs, and there are roughly only 1,000 top level security experts compared to a need for 10,000 to 30,000. This brought him to the point that we are in deep need of "people architecture", an alignment of people, programs, practices, and technology. The benefit is an optimization of assets, improved decision making, minimizing unwanted circumstances, etc. Finally, Mr. Foote discussed the need for consistent job titles and skills across organizations and industries. The lack of such a consistent job definition makes it hard to compare, for example, a system administrator for one organization to a system administrator for another organization. Mr. Foote did an excellent job presenting and I would highly recommend attending other presentations he puts on.

The second session I attended was not quite as good but I did still come away with some good content. It was titled "Improving Incident Response Plan With Advanced Exercises" by Chris Evans. He stressed the need for "pre-incident" training in order to develop muscle memory. The goal is to stretch beyond just compliance. He described several ways of doing this, from least to most complex: workshops, table top exercises, games, simulations, drills, and full scale exercises. The more complex ones yield more tangible benefits but require more investment of time, resources, and expertise. The first step is to develop the objectives so that the people who need to participate can be identified. The key takeaway was that we need to evaluate > test > assess > drill.

The third session on day one was titled "Cyber Law Update". The presenter struggled on this one. She was neither a technical person nor a manager of technical people. She was, I believe, an insurance person. But, she found herself being corrected several times by the audience. Nevertheless, there was some good content to come out of the presentation. One of the key points was regarding the establishment of FTC authority as it relates to cyber-security breaches. She discussed LabMD, which was held liable not for the breach itself but rather for the failure to take "reasonable" security measures. Another valuable contribution from this presentation was that the inability to show injury is what stops most lawsuits against companies from being successful. Emotional distress does not count. You must show some sort of physical injury. If you cannot show what or how much you lost, you have no case.

The fourth session of the day was titled "Posture Makes Perfect: Cyber Residual Risk Scoring". This one was interesting. The presenter was a little unclear in terms of specifics on his scoring model but the general idea was a calculation that gave you a residual which represented risk. He briefly mentioned threat maps and displayed one by Kaspersky and mentioned the Norse Map. I have seen these before but never spent much time looking at them. Having said that, in looking through my notes for this blog post, I Googled them and ran across a site that lists both of these as well as several others. These are pretty slick and can be interesting and compelling when trying to discuss how pervasive security issues are. He also referenced the over-referenced (his words) Sun Tzu quote about know your enemy, know yourself, ... While the process he was advocating rested on the argument that you could know your enemy, he started off stating that, given the complex threat environment, you could not know your enemy. This seemed more realistic to me. There are nation states, organized crime, hacktivists, cyber criminals, etc. This makes it seemingly impossible to know your enemy with certainty, at least without a delay to properly investigate. There are just too many possibilities. But, it does suggest that we need to develop methods to more quickly identify these sources so that we can more adequately combat threats. He finished up talking about there being lots of standards and lots of certifications that demonstrate or express proficiency as it relates to assessing, developing, and implementing security in organizations. Despite all of this, breaches continue to occur. Touché!

There was a fifth session for the day but I had to leave. Day two was really pretty solid. All the sessions were quite good I would say. For my first session on day two, I attended "Advances in Security Risk Assessments". Presented by Mr. Doug Landoll, it started with an Einstein quote: "We cannot solve our problems with the same thinking we used when we created them." He explained that the threat calculation, whichever one you use, needs some sort of data. You can get that data from many different places. This may be as simple as a survey: "Do you have a firewall in place?" ... He stated that CISOs are in high demand and that if you examine job requirements on job posting sites, the requirements can all be boiled down to "reducing risk." In order to determine risk, the process for determining a risk score is important. You have to examine controls that are in place. For example, what is the hiring process like? You need to establish physical and logical boundaries to your assessment. You also need to apply a legitimate framework. In his opinion, some "frameworks" are not frameworks but are really just a collection of a few best practices (e.g., SOX, HIPAA, PCI, etc.). Legitimate frameworks include COBIT, NIST, ISO 27001, Cyber-Security Framework, FISMA, etc. With a framework identified, you need to have it mapped (hopefully it is already mapped by a good source) to a standard such as PCI. His point here was that standards and regulations are not frameworks. He then pointed to an article he published on LinkedIn. For assessment, he mentioned RIIOT: review documents, interview, inspect, observe, and test. Combine multiple approaches. To do good assessment, you need objectivity, expertise, and quality data. Finally, he plugged another person's book on quantitative assessment (Doug Hubbard). Follow this presenter on LinkedIn.

The second presentation on day two was titled "Culture Changes, Communicating Cyber Risk in Business Terms." One of the panelists stated that technology was similar to dog years, referring to the speed of change. The concept of nation states launching cyber attacks is recent. Attack surfaces have mushroomed. It was also pointed out that the boundary of the enterprise is becoming harder to define as we rely more on BYOD devices, cloud services, etc. When asked about some of the recent drivers of culture change, the panel brought up the data breach at the Office of Personnel Management, the Mirai DDoS attack, and Dwolla. The interesting thing about this last one was that the company was held responsible, not for a data breach, but rather for claiming through advertising that its systems were more secure than they actually were. Another example of a driver of culture change was ransomware and the interaction between victims and hackers. The panel concluded by discussing some of the existing standards (NIST, ISO 27005, etc.) and their focus on IT security risk, arguing that we need to refocus on enterprise risk instead. I read into this an alignment of security and business objectives.

The third session I attended for the day was titled "Stepwise Security - A Planned Path to Reducing Risk" by Wade Tongen. He described the "de-perimeterization" of organizations and how that makes securing them difficult. Per the 2016 Verizon Data Breach Report, 63% of breaches occur as a result of weak, default, or stolen passwords. He mentioned the need for identity assurance because users have multiple identities (e.g., personal, professional, privileged, non-privileged). There is a need for consolidated identities. Fragmented identities result in sticky notes, use of the same password for multiple systems, spreadsheets, etc. Use multifactor authentication EVERYWHERE. Organizations need role based provisioning so that applications, services, licenses, etc. are all associated with a role so that when that role changes, access changes accordingly. Finally, the faster we can identify perpetrators, the better the chance of being able to do something about it. He used a convenience store robbery as an example. If it is robbed and you can give the police an accurate description quickly, they are more likely to be able to do something about it than if you can't provide them with evidence (such as video surveillance) for several days.
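
The role based provisioning idea is easy to sketch in code. The Python below is my own illustration, not something shown in the session: the role names, the entitlement catalogue (ROLE_ENTITLEMENTS), and the can_access helper are all hypothetical. The point it tries to show is that access hangs off the role rather than the person, so changing a user's role changes what they can reach without touching each application.

```python
from dataclasses import dataclass

# Hypothetical catalogue mapping each role to the resources it is entitled to.
ROLE_ENTITLEMENTS = {
    "help_desk": {"ticketing", "email"},
    "sysadmin": {"ticketing", "email", "server_console"},
}


@dataclass
class User:
    name: str
    role: str

    @property
    def entitlements(self):
        # Access is derived from the current role, never stored per user.
        return ROLE_ENTITLEMENTS.get(self.role, set())


def can_access(user, resource):
    """Return True if the user's current role grants the resource."""
    return resource in user.entitlements


pat = User(name="pat", role="sysadmin")
before = can_access(pat, "server_console")

pat.role = "help_desk"  # a role change...
after = can_access(pat, "server_console")  # ...changes access accordingly

print(before, after)
```

A real deployment would sit on top of a directory or identity management product, but the design choice is the same: one place to define what a role gets, and nothing to clean up per application when someone moves.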

The final session I attended on day 2 was over Mr. Robot and whether or not it was an accurate depiction of a hacker's perspective. It was a panel session, and the consensus was that it was. I left this session as I did not really see much value in the discussion. Overall, it was a good experience. It was new to me. As I mentioned, I am used to academic conferences. But, this was a nice conference to attend, one where I can do some further research on some of these concepts and take them back and use them in my classes.

#BCIS5304 #BCIS3347 #ISSEConf

Tuesday, September 13, 2016

Review: Tarleton School of Criminology Cybersecurity 2016 Summit

Today, I attended the Tarleton School of Criminology Cybersecurity 2016 Summit held at the George W. Bush Presidential Library. I have to say that it was extremely well done. The various speakers brought forth an upbeat, hopeful message about the future of cybersecurity. With presentations from former military, law enforcement, lawyers, professors, and consultants, it was packed full of useful information.

One of the nice little nuggets I ran across was the concept of the "kill chain" presented by Col. Jeff Schilling (ret.). Naturally, this came from one of the former military presenters. A quick Google and the meaning behind it was clear, and the extension to the field of cybersecurity could be easily extrapolated. Essentially, the kill chain is all the steps necessary to successfully eliminate a target. As it relates to cybersecurity, it would simply be all the steps necessary to compromise a target (read: data). Remove any step in the kill chain and the objective of compromise cannot be completed. Now, here's the kicker. As security professionals, you can attack the kill chain at each and every stage OR you can focus your efforts on a single stage. Basically, this was the argument made today. The point is not to ignore all the other stages. Patching is still important. So is user education. But focus on the data. Focus on the prize.

The thought process behind this is that if we spread our focus across all steps in the kill chain, it divides our attention and we become less effective. As long as we do not lose sight of our most valuable asset, we can focus our efforts where we can be most efficient and effective.

We also had presentations from the Secret Service as well as the FBI (no photos or names please).

Our keynote during lunch was Mr. Brian Sartin, Managing Director of Verizon RISK Services, who spoke about Verizon's 2016 data breach report. No surprises here. Same thing as last year. Threats continue to grow, particularly as they relate to nation state hacking/espionage.

Then we had Candy Heath, AUSA and Lead Cyber Attorney for the United States Northern District of Texas, confirm some of the keynote speaker's findings that the vast majority of those compromised don't know it. Rather, once a perpetrator is taken into custody and their systems are evaluated, numerous targets (sometimes dozens to hundreds) are discovered. This explains why it takes so long for organizations to discover that a breach has occurred. They are not discovering the breach. Others are...usually about 9 months after the breach originates.

Then came a panel discussion over the state of educating security professionals and meeting the needs of employers. The consensus was that we have a lot of work to do. Security professionals are very mobile. They can afford to be. They have highly sought after skills and few competitors. Depending on the presenter, there is a roughly 200,000 person shortage in this country. And, they predicted the problem would get worse. They also recognized that even within the general field of security, there are specializations and that sometimes, an organization has security specialists, just not the specific ones they need.

Shawn Tuma, another attorney, spoke about representing organizations that were the target of cyber attacks and noted the importance of simply having procedures in place and following them. Failure to protect data in and of itself is not the problem. But, failing to take reasonable precautions and not following established procedures opens up an organization to liability.

The second to last speaker was Chuck Easttom, computer scientist and author. He teaches, consults, testifies (prosecution and defense), etc. Bright guy. Would love to take some of his classes. He outlined an incident response template. The big takeaway from that was about verifying the credentials of the forensic expert. By that, he meant actually verify. Do not just take their word for it. Make sure they do the work and/or that they supervise those who do.

Lastly, Randell Casey, retired from the Army, gave a great presentation. The takeaway there was "where are your electricians?" His point was that at the turn of the century in 1900, electricity was new. The government and large organizations had electricians on staff. When they needed new lines or a problem fixed, they just got their electricians to do it. Today, we simply expect to be able to flip a switch and for everything to work. If we need an electrician, we outsource it. His point was that security is moving in that direction. As things become more virtualized and more cloud based, organizations should shift to what they do best and leave the commoditization of infrastructure and security to professionals. But, he also restated the issue regarding the limited number of professionals on the market. His point here was that as the government continues to develop and employ many of those with these specialties, and as organizations start to wake up and truly understand how widespread this issue is, the shortage today could grow substantially before market forces can begin to correct the situation.

Now, I do not know if this was a one-off event. I hope not. It was extremely well done. All the speakers were top-notch from beginning to end. It has me rethinking our CIS programs at Tarleton to see how we might be able to collaborate with the CJ folks in order to generate some synergies. This was exciting, cool stuff.


Wednesday, July 20, 2016

Security Theories

Computer Anxiety: "Excessive timidity in using computers, negative comments against computers and information science, attempts to reduce the amount of time spent using computers, and even the avoidance of using computers from the place where they are located" (Doronina, 1995).

- Doronina, O. "Fear of Computers: Its Nature, Prevention and Cure," Russian Social Science Review (36:4) 1995, pp 79-90.

General Deterrence Theory:

- BOSS SR, KIRSCH LJ, ANGERMEIER I, SHINGLER RA and BOSS RW (2009) If Someone Is Watching, I’ll Do What I’m Asked: Mandatoriness, Control, and Information Security. European Journal of Information Systems 18(2), 151–164.

- STRAUB DW and WELKE RJ (1998) Coping With Systems Risk: Security Planning Models for Management Decision Making. MIS Quarterly 22(4), 441–469.

- D’ARCY J and HOVAV A (2009) Does One Size Fit All? Examining the Differential Effects of IS Security Countermeasures. Journal of Business Ethics 89, 59–71.

- HERATH T and RAO HR (2009) Protection Motivation and Deterrence: A Framework for Security Policy Compliance in Organisations. European Journal of Information Systems 18(2), 106–125.

- PAHNILA S, SIPONEN M and MAHMOOD A (2007) Employees’ Behavior towards IS Security Policy Compliance. In: 40th Annual Hawaii International Conference on System Sciences. Waikoloa, HI: IEEE Computer Society.

- STRAUB DW (1990) Effective IS Security: An Empirical Study. Information Systems Research 1(3), 255–276.

Protection Motivation Theory:

- Rogers, R.W. (1975). A protection motivation theory of fear appeals and attitude change. Journal of Psychology, 91, 93-114.

- Rogers, R.W. (1983). Cognitive and physiological processes in fear appeals and attitude change: A revised theory of protection motivation. In J. Cacioppo & R. Petty (Eds.), Social psychophysiology (pp. 153-176). New York: Guilford.

- MADDUX JE and ROGERS RW (1983) Protection Motivation and Self-Efficacy: A Revised Theory of Fear Appeals and Attitude Change. Journal of Experimental Social Psychology 19(5), 469–479.

- CROSSLER RE (2010) Protection Motivation Theory: Understanding Determinants to Backing Up Personal Data. In: 43rd Hawaii International Conference on System Sciences. pp. 1–10.

- HERATH T and RAO HR (2009) Protection Motivation and Deterrence: A Framework for Security Policy Compliance in Organisations. European Journal of Information Systems 18(2), 106–125.

- JOHNSTON AC and WARKENTIN M (2010) Fear Appeals and Information Security Behaviors: An Empirical Study. MIS Quarterly 34(3), 549–A4.

- PAHNILA S, SIPONEN M and MAHMOOD A (2007) Employees’ Behavior towards IS Security Policy Compliance. In: 40th Annual Hawaii International Conference on System Sciences. Waikoloa, HI: IEEE Computer Society.

- VANCE A, SIPONEN M and PAHNILA S (2012) Motivating IS Security Compliance: Insights from Habit and Protection Motivation Theory. Information & Management 49(3–4), 190–198.

- WOON I, TAN G-W and LOW R (2005) A Protection Motivation Theory Approach to Home Wireless Security. In: Proceedings of the 26th International Conference on Information Systems. pp. 367–380.

- LEE Y and LARSEN KR (2009) Threat or Coping Appraisal: Determinants of SMB Executives’ Decision to Adopt Anti-Malware Software. European Journal of Information Systems 18(2), 177–187.

Technology Threat Avoidance Theory (TTAT): Posits that threat avoidance behavior functions as a dynamic positive feedback loop (a concept derived from cybernetic theory and general systems theory) composed of two cognitive processes, threat and coping appraisals, which determine how an individual would cope with IT threats.

- LIANG H and XUE Y (2009) Avoidance of Information Technology Threats: A Theoretical Perspective. MIS Quarterly 33(1), 71–90.

- LIANG H and XUE Y (2010) Understanding Security Behaviors in Personal Computer Usage: A Threat Avoidance Perspective. Journal of the Association for Information Systems 11(7), 394–413.

Fear Appeal Theory:

- JOHNSTON AC and WARKENTIN M (2010) Fear Appeals and Information Security Behaviors: An Empirical Study. MIS Quarterly 34(3), 549–A4.

Technology Anxiety (as a predictor of technology adoption): An individual's tendency to be uneasy, apprehensive, or fearful about the current or future use of technology (Parasuraman and Igbaria, 1990; Allen and Parikh, 2002).

- Allen, J.W., and Parikh, M.A. "The Impact of Personal Traits on IT Adoption," Proceedings of the 8th Americas Conference on Information Systems, Dallas, TX, USA, 2002.

- Meuter, M.L., Ostrom, A.L., Bitner, M.J., and Rountree, R. "The Influence of Technology Anxiety on Consumer Use and Experiences with Self-Service Technologies," Journal of Business Research (56) 2003, pp 899-906.

- Parasuraman, S., and Igbaria, M. "An Examination of Gender Differences in the Determinants of Computer Anxiety and Attitudes Towards Microcomputers Among Managers," International Journal of Man-Machine Studies (32:3) 1990, pp 327-340.

Monday, June 6, 2016

AACSB Impact Forum

The College of Business at Tarleton State University is pushing to obtain accreditation from the Association to Advance Collegiate Schools of Business (AACSB), the premier accrediting body for business schools. Before this process began, I knew that AACSB existed. I knew the general idea behind accreditation. But, I can honestly say that I did not know the degree to which they can drive value within a business school by helping to establish and maintain standards by which schools can first be measured and second, continuously improve.

As part of our push for accreditation, this past weekend I attended one of their "Impact Forums" held at their international headquarters in Tampa, Florida. They have identified impact as one of the three pillars of accreditation along with engagement and innovation. But impact is something that can be a little nebulous at times. After all, how do we know that our teaching is having real "impact"? Hence, the reason behind the forum.

So, what is "impact" and how does it apply to the college of business? Well, impact is pretty easy to define: to have a strong effect on something or someone. For the college of business, that means we want our teaching, research, and service to have impact. Of course, the assumption is that the impact is positive, and hopefully it is.

Traditionally, faculty impact has been assessed by counting publications in peer-reviewed and/or high-quality journals. Why? Because they are relatively easy to count. But, we have to realize that impact has many different stakeholders. These might include faculty, staff, administrators, or the academy itself. Other stakeholders might include students, businesses, accrediting organizations, governing bodies, and so on.

So, we need to look at impact from a much broader perspective. Again, as it relates to research, beyond counting the number of peer-reviewed journal publications a researcher has, additional impact information that might prove useful is whether or not other professors are using that work in their classes. Are businesses applying that research to their organizations? That is real impact.

But, measuring such impact can prove difficult. Sometimes it takes considerable time for impact to be seen. For example, research can take more than a year to get published. Beyond that, such research must then be disseminated to various stakeholders for them to begin to be influenced by the results. Ultimately, it may be 2 or 3 years or more before an impact can be measured. Even then, whether the impact is explicit or implicit can make measuring it difficult at best.

What about other kinds of impact though? Turns out, they are all around us. Some of them are quite easy to measure. We just need to rethink how we look at impact. For example, the number of degrees granted and student placement success (along with research quality and quantity) are the most commonly used impact metrics. These are followed by the number of consulting projects and applied research, rankings, surveys and feedback from key stakeholders, and community engagement and student projects. Finally, we have things like assurance of learning data, alumni engagement, and so on that can serve as evidence of impact.

So, the scope of impact is much broader than how it might have originally been interpreted. In academia, we have impact in all three areas: research, teaching, and service. The key is to recognize when we are having an impact and weave it into a coherent story about who we are as an institution.

With such a large potential scope of impact with which to tell our story, how do you know where to start? Start with your mission statement. It tells stakeholders what you value the most. If that is where your value is, that is where the focus of your impact measurements should be. For example, if you are a teaching school and you value innovative teaching, you should probably focus less on measuring and reporting the impact of research. That is not to say that research should be completely ignored. It just should not be "featured".

So, start with your mission and align appropriate metrics to assess impact. Identify what is impacted, by what, how, what the measures are, and how often they are to be measured. You are drawing a road map here so that you can create repeatable results. In the end, this does not have to be a painful process. You do have to openly and honestly reflect on your college. But, I think in the long run, it creates an environment for continuous improvement that keeps people engaged and excited about coming to work and doing great things!

Wednesday, February 24, 2016

What is a router and what does it do?

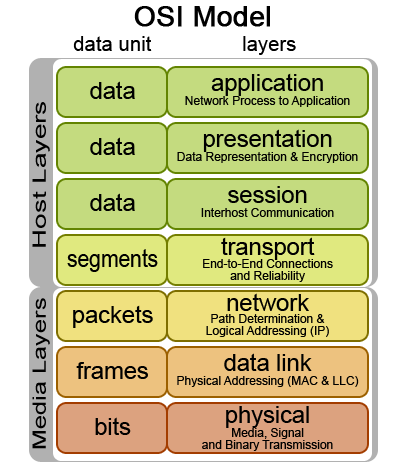

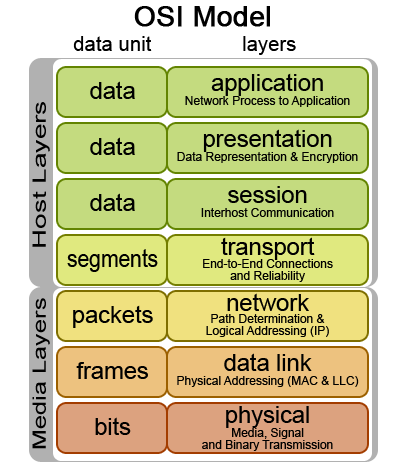

In working with students on their logical designs, it has become clear to me that many students get confused about the differences between routers and switches. So, I wanted to take a moment to talk specifically about routers. So, what is a router? Well, a router links two or more IP networks together and directs IP packets from one network to another. When you see IP, you should automatically start thinking about the OSI model and specifically, layer 3 (the network layer) of the OSI model.

Figure 1: The OSI Model

Typically, when students start creating their logical diagram, if they include logical configuration information at all, they always seem to focus on the internal side of this connection: the LAN side. But there is the WAN side of this connection as well. What kind of configuration information is on the WAN side of the connection? It (hopefully) obviously needs an IP address. Just to make this clear, every device connected directly to the Internet needs a unique public IP address in order to be able to communicate with other devices over the Internet. The WAN connection also needs a subnet mask. The subnet mask is important because it tells the router which part of the WAN IP address refers to the network and which part refers to the router itself (on the WAN side). The WAN side also needs a default gateway. If the router does not know where to route a particular packet, it forwards the packet to the default gateway, an upstream router that may in fact know where that particular packet should be sent. Lastly, the WAN side of the connection needs to know a DNS server so that it can resolve domain names and share that information with clients on the LAN side.
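To make the network part versus host part idea concrete, here is a minimal sketch using Python's standard `ipaddress` module. The address and mask are made up for illustration (203.0.113.0/24 is a range reserved for documentation), so treat them as assumptions rather than values your ISP would actually hand out.

```python
import ipaddress

# Hypothetical WAN settings, for illustration only.
# 203.0.113.0/24 is reserved for documentation and is not a real assignment.
wan = ipaddress.ip_interface("203.0.113.10/255.255.255.0")

# The subnet mask splits the address into a network part and a host part.
network_part = wan.network.network_address
host_part = int(wan.ip) & int(wan.network.hostmask)

print(f"address: {wan.ip}")
print(f"mask:    {wan.netmask}")
print(f"network: {wan.network}")
print(f"host id: {host_part}")
```

With a mask of 255.255.255.0, the first three octets identify the network and the last octet identifies the router on that network.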

Now, the good news is that while you need to know how to configure these settings, you do not have to come up with the numbering scheme itself. If you have a static connection, this information will be provided to you by your ISP. This is typically the case for business class services in which you need your external address to stay the same so that web servers, email servers, and so on can routinely be found in the same place logically. Consumers typically have dynamic addresses and this really makes things easy as the router reaches out to the ISP and obtains this information automatically. The advantage of this is obviously that it reduces your administrative burden.

Figure 2 below shows a router where the WAN port is labeled 'Line'. It also happens to have a four-port switch built in, with each port labeled 1 through 4. So, the WAN configuration information is associated with the WAN or 'Line' side of the router, whereas the LAN configuration information is associated with the clients connected to the four ports.

Figure 2: Router

On the internal side of the network, we also have configuration information that is needed. Specifically, we need an IP address and a subnet mask to tell the router which portion of the IP address represents the network and which part represents the router itself. The IP address serves as the default gateway for all the clients on the internal side of the network. Where is the rest of the configuration information internally? It's not there. When a client on the internal side of the network does not know where the server is that it is trying to communicate with, it sends the packet to its default gateway which is found in its own configuration information. That gateway is the router's LAN connection. When the router gets that request, if the requested server is not on the LAN side of the connection, it routes the packet to the WAN side of the connection which includes a default gateway so that it knows where to forward the packet.
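The forwarding decision described above can be sketched in a few lines of Python. This is only a simplified illustration, not how any particular operating system or router implements it. The function name `next_hop` and all of the addresses are hypothetical.

```python
import ipaddress


def next_hop(client_iface: str, default_gateway: str, destination: str) -> str:
    """Return where a client sends a packet: straight to the destination
    if it is on the client's own subnet, otherwise to the default gateway."""
    iface = ipaddress.ip_interface(client_iface)
    dest = ipaddress.ip_address(destination)
    if dest in iface.network:
        return str(dest)
    return default_gateway


# Hypothetical client on the LAN side, with the router's LAN address as gateway.
client = "192.168.1.25/24"
gateway = "192.168.1.1"

local = next_hop(client, gateway, "192.168.1.40")   # same subnet
remote = next_hop(client, gateway, "198.51.100.7")  # somewhere else
print(local, remote)
```

The router then makes the same kind of decision on its WAN side, using its own default gateway for anything it does not know how to reach.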

Figure 3 illustrates a sample logical diagram. In this diagram, for the gateway router, you should see that the WAN connection is dynamically set. Again, this makes it easy on the network administrator. Internally, there is an IP address and a Subnet Mask (SM). There is also an SSID to indicate that this is also a wireless router as well as information indicating that this wireless router is capable of serving as a DHCP server.

Figure 3: Logical Diagram

You may also notice a second, internal router is included in the diagram. This design is segmenting the network at layer 3. I want to draw your attention to the WAN side of the internal router. It includes the IP address and the default gateway. Specifically, I want to draw your attention to the IP address and the fact that it is on the same subnet as the internal side of the gateway router. This is necessary so that the two routers can communicate. Also, note the internal IP address of the internal router. Note that it is on a different subnet. As a result, it can be said that this network is segmented to keep traffic from the business side of the network separate from the traffic on the personal side of the network.
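If you want to double check a two-router design like this, a short Python sketch with the standard `ipaddress` module can verify both conditions. The addresses below are made up for illustration and are not the ones in Figure 3.

```python
import ipaddress

# Hypothetical addressing, not taken from Figure 3.
gateway_lan = ipaddress.ip_interface("192.168.1.1/24")   # gateway router, LAN side
internal_wan = ipaddress.ip_interface("192.168.1.2/24")  # internal router, WAN side
internal_lan = ipaddress.ip_interface("192.168.2.1/24")  # internal router, LAN side

# The two routers can talk only if the internal router's WAN address
# sits on the gateway router's LAN subnet.
routers_can_talk = internal_wan.ip in gateway_lan.network

# The network is segmented at layer 3 if the internal LAN is a different subnet.
segmented = not internal_lan.network.overlaps(gateway_lan.network)

print(f"routers share a subnet: {routers_can_talk}")
print(f"internal LAN is a separate subnet: {segmented}")
```

In a layout like this, the internal router's default gateway would be the gateway router's LAN address (192.168.1.1 in this made-up example).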

So, that is what a router is, along with some basics about the configuration information that goes into one. Commercial routers get much more complicated quickly. But, at the same time, commercial routers are beginning to take on similar dashboards to consumer grade routers, making them easier to configure and use. If you have any questions, please leave a comment. Follow me on Twitter @SchuesslerPhD and until next time, happy networking.

Dr. S.

Tuesday, January 19, 2016

PANs, LANs, and WANs, Oh My!

Trying to cover some of the basics this week in my undergraduate networking class. One question that regularly comes up is what the differences are between LANs and WANs. Well of course, if you are familiar with this concept, then the answer is simple. But for some of my students who are just starting out in technology in general and networking specifically, the delineations may not be quite so clear. Below, I have put together a short little video going over the characteristics of each and included some additional explanation for other types of networks.

Personal Area Networks (PANs): PANs are small networks, usually made up of Bluetooth devices, that quickly and easily connect devices within a few feet of each other. We see examples such as Bluetooth headsets that link together with cell phones and Bluetooth printers that wirelessly connect to computers to eliminate printer cables. I have a little Google Nexus 7” tablet that connects via Bluetooth, eliminating the need to plug in any sort of cabling.

Local Area Networks (LANs): LANs are larger in scale. Different texts provide similar but often distinct definitions, so it can sometimes be difficult to determine precisely what represents a LAN versus a CAN versus a PAN, etc. For the most part, a LAN exists on a single property where cables and wireless access points can be deployed without the need to lease lines from a carrier or obtain permission from anyone else. LANs consist of anywhere from two nodes to usually no more than a few hundred, and they usually stretch no more than a few hundred feet.

Campus Area Networks (CANs): CANs are larger still. Think of your local university or a large corporate headquarters like Microsoft in Redmond, Washington. These are areas that have a need for more than a couple hundred nodes on the network. Rather, they have a need to connect multiple LANs together, yet all on contiguous property. This LAN of LANs, as described here, can be referred to as a CAN.

Metropolitan Area Networks (MANs): MANs are larger in scope yet again, this time spanning the size of a city or even multiple cities. These are becoming more and more common. Denton and Granbury, Texas both have MANs that provide services not only to citizens, but also to workers who require network access in the field.

Wide Area Networks (WANs): WANs are the ultimate in scope. They span larger regions still, potentially covering the entire planet. The Internet is the ultimate example of a WAN, though certainly not the only WAN that exists. Many corporations lease lines in various cities around the world in order to establish their WAN. WANs often utilize public carriers such as AT&T, Sprint, and so on in order to capitalize on what those carriers do well rather than have to become experts themselves at laying and maintaining lines around the world, a very expensive endeavor.
